Name the Blame Game: An AI Liability Primer for Healthcare (Part 1)

- Jeremy Hessing-Lewis

- Aug 1

Updated: Aug 11

This is the first of a two-part series.

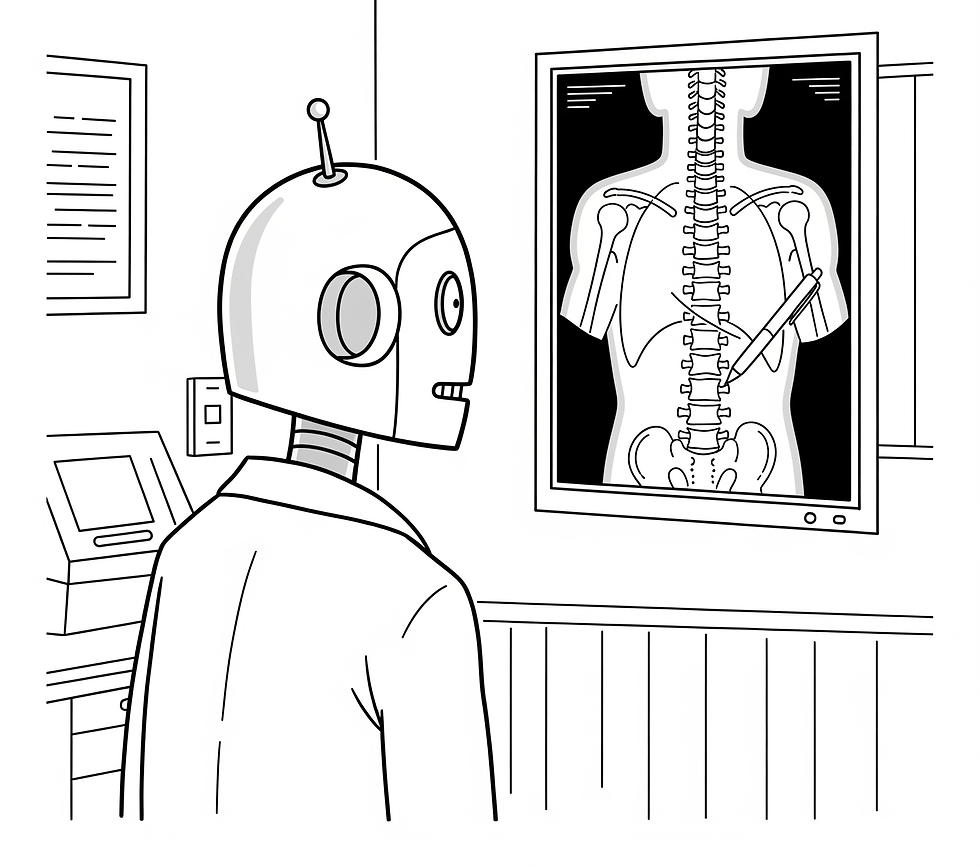

As AI rapidly integrates into healthcare, a critical question emerges: who is to blame when things go wrong? This post summarizes the primary sources of liability for the use of generative AI models in healthcare. Professional negligence and product liability remain the dominant sources of risk exposure. While the legal landscape of the blame game will evolve with novel arguments and emerging case law, there are already well-established steps organizations can take to mitigate such risks. This first post describes the context; the second will describe the types of legal claims.

First, Consider Doing Nothing

The first option of risk management reflects our bias towards inaction: do nothing. The reality of healthcare is that almost nobody gets fired for preserving the status quo. Even where economic and care delivery trends are headed towards a demographic cliff, healthcare innovation is exceptionally conservative by design. These are people’s lives at stake.

The result is that we have optimized our healthcare systems to prefer moral liability by omission rather than financial liability by commission. Nobody gets fired if a significant population is denied access to care. In contrast, there is strict accountability if you are so bold as to pursue an innovative healthcare delivery implementation where mistakes are made. For example, a clinic that forgoes an AI diagnostic tool might miss early detection of a disease, leading to worse patient outcomes. How many patients’ lives could be saved before the tool becomes so essential that health providers are expected to adopt it as part of their standard of care?

The starting place for understanding generative AI liability is to force healthcare organizations to perform a risk assessment on doing nothing. Let’s just acknowledge what will happen if the organization opts not to pursue innovations in care (not just algorithmic). We need to ensure that there is just as much accountability for inaction.

AI in Healthcare is Not New

From CT Scans to Ambient Scribes

Despite the current excitement regarding the introduction of large language models (LLMs) within healthcare, AI has been quietly supporting healthcare professionals for decades. In radiology, the first research was performed on mammography in 1992 and the first FDA-approved algorithm was made available in 2017. That is the same year Google researchers published the landmark “transformers” paper Attention Is All You Need, describing the architecture that underpins modern LLMs. OpenAI subsequently introduced ChatGPT, built on this architecture, in November 2022.

There are distinguishing characteristics of the post-2022 adoption of AI in healthcare:

- Generative AI models are now widely accessible to consumers;

- Many generative AI tools do not require a healthcare provider in the loop;

- AI models are probabilistic and may not produce consistently replicable outputs;

- The scope of healthcare-related use cases is increasingly broad, ranging from exceptionally specific diagnostic tools to general-purpose ambient scribes; and

- These tools are generally not being developed or marketed as regulated medical devices.

These changes have increased uncertainty about the legal risks of adopting such tools.
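The point above that AI models are probabilistic can be illustrated with a toy sketch. It is not how any particular clinical product works: the labels, scores, and function names below are hypothetical, and a real generative model samples over a far larger vocabulary. The sketch only shows why the same input can yield different outputs when sampling is enabled, and why outputs become repeatable when it is switched off.

```python
import math
import random

# Hypothetical candidate outputs and model scores for ONE fixed input.
LABELS = ["benign", "follow-up recommended", "urgent referral"]
SCORES = [2.0, 1.6, 0.4]


def softmax(scores, temperature):
    """Convert raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_label(temperature, rng):
    """Pick one label. Temperature 0 means always take the top-scoring label."""
    if temperature == 0:
        return LABELS[SCORES.index(max(SCORES))]
    weights = softmax(SCORES, temperature)
    return rng.choices(LABELS, weights=weights, k=1)[0]


def run_many(temperature, n=1000, seed=0):
    """Ask the same 'question' n times and count how often each label appears."""
    rng = random.Random(seed)
    counts = {label: 0 for label in LABELS}
    for _ in range(n):
        counts[sample_label(temperature, rng)] += 1
    return counts


if __name__ == "__main__":
    print("temperature 0:", run_many(0))
    print("temperature 1:", run_many(1.0))
```

With sampling switched off, every run returns the same label. With sampling on, the identical input is spread across several labels, which is the replicability concern in miniature.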

Academic Literature

The risks created by generative AI tools within healthcare are well documented in the academic literature. Irrespective of the healthcare context, the following themes are prevalent:

- Safety - False positives;

- Safety - False negatives;

- Safety - Replicability;

- Safety - Impairment of professional judgment;

- Bias - Racial and other demographic disparities;

- Privacy and informed consent; and

- Security risks.

These can be simplified into two types of risks:

- data protection risks; and

- clinical safety risks.

While the former is common to all software in healthcare, uncertainty about the latter goes to the core of healthcare innovation: what happens when the software makes a mistake?
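The false-positive and false-negative themes can be made concrete with a small calculation. The sketch below assumes a hypothetical screening tool; the prevalence, sensitivity, and specificity figures are illustrative only and are not drawn from any study or product.

```python
def screening_outcomes(population, prevalence, sensitivity, specificity):
    """Expected counts for a screening tool applied to a population.

    sensitivity: share of people WITH the disease that the tool flags.
    specificity: share of people WITHOUT the disease that the tool clears.
    """
    diseased = population * prevalence
    healthy = population - diseased

    true_positives = diseased * sensitivity
    false_negatives = diseased - true_positives   # missed cases
    true_negatives = healthy * specificity
    false_positives = healthy - true_negatives    # false alarms

    flagged = true_positives + false_positives
    # Positive predictive value: of those flagged, how many are truly ill.
    ppv = true_positives / flagged if flagged else 0.0

    return {
        "true_positives": true_positives,
        "false_negatives": false_negatives,
        "true_negatives": true_negatives,
        "false_positives": false_positives,
        "ppv": ppv,
    }


if __name__ == "__main__":
    # Hypothetical figures: 1% prevalence, 90% sensitivity, 95% specificity.
    result = screening_outcomes(10_000, 0.01, 0.90, 0.95)
    for name, value in result.items():
        print(f"{name}: {value:.2f}")
```

Under these assumed figures, false alarms outnumber true detections because the disease is rare, while a small number of real cases are still missed. Both kinds of error are a potential mistake by the software.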

Types of Liability

Lawyers need to fit any legal claim within a “cause of action.” This is the legal basis for bringing a lawsuit. This is where we need to name the blame game. Part two of this series will summarize the specific sources of liability.