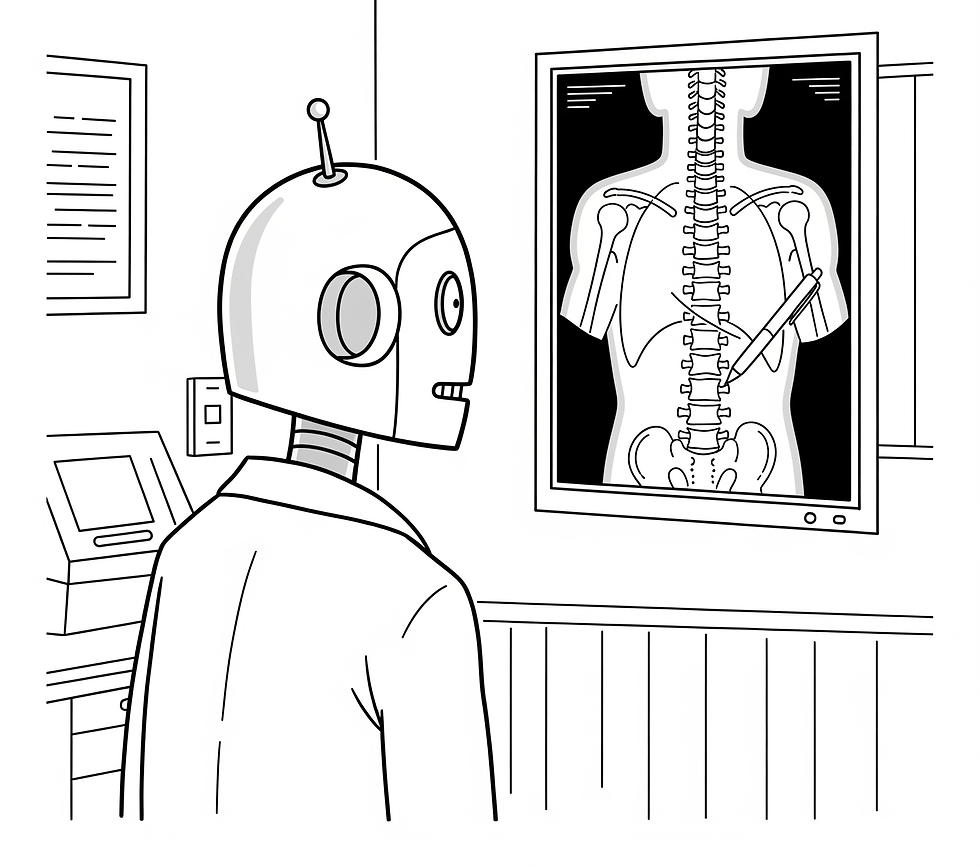

Name the Blame Game: An AI Liability Primer for Healthcare (Part 2)

- Jeremy Hessing-Lewis

- Aug 11

Updated: Aug 12

This is the second of a two-part series.

The first post described the context for AI tooling in healthcare from a risk management perspective. This second post summarizes the types of legal claims that may be used in the event that someone suffers harm or loss as a result of the use of an AI service. Notwithstanding the perceived novelty of the technology, the categories of liability are well established and offer widely accepted approaches to mitigating such risks.

Professional Negligence - Medical Malpractice

Where a healthcare provider fails to meet the accepted standard of care, resulting in patient harm, they may be liable for professional negligence. Use of AI does not replace clinical judgment, and providers are expected to critically assess AI outputs as part of their duty of care. Negligence generally follows a reasonableness standard that evolves with the context of clinical standards.

While medical malpractice is attributed to individual professionals, the principle of vicarious liability can make an employer or supervising organization liable for resulting harm, even if the organization itself was not directly at fault.

Product Liability

Product liability is the legal responsibility of manufacturers, distributors, or sellers for harm caused by defective products. A product may be considered defective due to flawed design, manufacturing errors, or inadequate warnings or instructions. If a healthcare AI tool causes injury because of such a defect, the company behind it may be held strictly liable, even without proof of negligence. With complex supply chains, product liability risks may involve many parties.

Regulatory Liability

Regulatory liability arises when an individual or organization fails to comply with laws or regulations enforced by government agencies. In healthcare, this can include breaches of data protection laws or failure to obtain required approvals for AI tools classified as Software as a Medical Device (SaMD). Consequences may include fines, sanctions, loss of licensure, or mandatory product recalls. For example, prescriptive privacy legislation may impose punitive fines calculated from an organization’s worldwide revenue following a data breach.

Contractual Liability

Contractual liability arises when a party fails to meet its obligations under a legally binding agreement. This can include breaches of service level agreements, data handling provisions, or performance guarantees related to the AI-powered services. If the software underperforms, causes harm, or fails to comply with contractual terms, the vendor or implementing party may be liable for damages or other remedies specified in the contract.

Intellectual Property Infringement

With software being primarily protected through copyright law, generative AI introduces two significant infringement-related risks: the training of AI models and the model itself. Whether the output generated is deserving of copyright protection is a separate matter. These questions remain before the courts in many jurisdictions. Under consideration is whether the training itself infringes the underlying copyright of the original work and, if so, whether there are applicable exceptions like fair dealing or fair use.

Challenges Applying Existing Liability Categories

There are meaningful challenges in adapting our liability models for generative AI, but they tend to arise from fact-specific difficulties rather than from uncertainty in the law itself:

Unilateral Click-Through Terms: The developers of foundational AI models hold dominant contracting power and impose one-sided, non-negotiable contractual terms that severely restrict any recourse through product liability claims.

Opacity (Black Box Problem): The complex, often non-transparent nature of AI algorithms makes it difficult to understand why an AI made a particular decision, complicating efforts to prove a defect or negligence.

Causation: Establishing a direct causal link between an AI's error and patient harm can be challenging, especially when multiple factors are involved in a patient's care.

Evolving Standard of Care: As AI becomes more integrated into healthcare, the "standard of care" for medical professionals will evolve, potentially requiring them to use or at least understand AI tools.

Regulatory Landscape: The regulatory environment for AI in healthcare is still developing, creating uncertainty around pre-market requirements and post-market responsibilities.

These challenges are already prevalent in litigation relating to software. Generative AI introduces challenges of degree and not of kind.

Risk Mitigation

The above legal claims are nothing new. Both product liability and medical malpractice are deeply rooted in healthcare: checklists, redundancies, disclaimers, accreditations, sterile packaging, and single-use devices are all symptoms of these risks. Because the types of claims are well established, there are also long-standing mitigating steps that individuals and organizations can adopt to limit their exposure.

Safety and Quality Management

Patient safety must always be the priority. With the exception of certain regulatory risks, claims under the sources of liability described above require proof of damages. Clinical teams are the front line of risk management, and they need to lead assessment processes for any generative AI tooling. These safeguards may be documented through a rigorous Quality Management System.

Adequate validation and verification processes must be applied to ensure that the product or service meets its intended design. Training and supporting documentation will also provide clear instructions and help organizations limit their risks.

Due Diligence

Healthcare organizations are particularly vulnerable to third-party risk from any threat or disruption to the flow of goods and services. For AI-powered services, the supply chain is effectively real-time, with minimal tolerance for unavailability or latency. Beyond validating the clinical effectiveness of the service, a robust third-party risk management program requires:

Privacy, security, and AI-governance assessments of third-party vendors prior to onboarding;

Recurring risk-based assessments; and

Strong contractual controls, including service level agreements.

Contractual Protections

Contractual measures should be paired with your organization’s due diligence. Data protection controls for your vendors should be equivalent to or stronger than the protections maintained within your own organization. Reviewing third-party assurance documentation, like SOC 2 Type II reporting or ISO certifications, goes a long way toward establishing that your organization has met the standard of care.

Indemnification language can also be used to insulate the purchaser from certain risks. Indemnification for intellectual property infringement is already a standard term in most software services agreements and should not require any material changes for use with generative AI.

Regulatory and Industry Standards

Emerging regulatory standards also support your approach to due diligence. Medical device regulations are being adapted to accommodate generative AI tooling and privacy legislation is being modernized to address AI governance risks. Industry groups are active both domestically and in a global capacity to help clarify minimum technical standards and establish consistent models for rapid adoption.

For their part, health regulatory colleges are busy providing guidance to their members in support of increased generative AI tooling. The emergence of ambient scribes is an excellent example of where self-regulatory organizations have helped support healthcare providers at scale.

Insurance

There is always insurance. Barring fraudulent or other criminal activities, insurance is intended to shield professionals and organizations from low-likelihood risks. While the first line of risk mitigation will remain clinical safety, the last line is insurance. Policies exist specifically to share risk across a community of policyholders to protect against certain high-impact events.

Now, Do Something

In summary, there are major risks in doing nothing in terms of healthcare innovation. There are also risks inherent in taking action, including the introduction of generative AI. The scale of economic and demographic challenges facing healthcare, irrespective of the delivery model, necessitates a bias to action.

I see widespread effort to place organizations within the middle of the pack in terms of adoption. I encourage those stakeholders not to dwell on whether they are first or last, but to ask whether the tool can be adopted safely, with risks tracked within the risk appetite of the organization. Proceed with caution, but proceed nonetheless.

The existing legal causes of action are well established and should adequately allocate blame for the mistakes that may occur, much like the mistakes that already occur. Most claims will involve some combination of professional negligence and product liability. The imperative is not to avoid AI, but to responsibly embrace it through diligent risk assessment and robust mitigation strategies, ensuring patient safety remains paramount while unlocking AI's transformative potential. That’s how to save lives and win the blame game.

Learn more!

For organizations interested in learning more about nymble, reach out to us at info@nymble.health.

For individuals, check out this page and email us at enroll@nymble.health.